Create Dask Dataframes

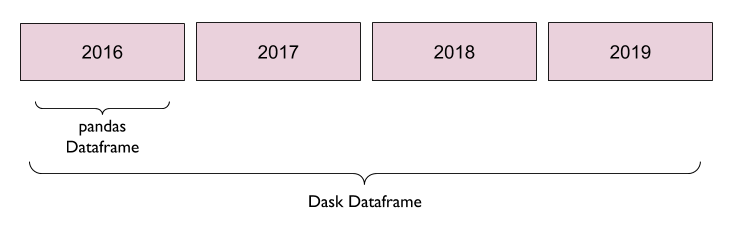

A Dask Dataframe contains a number of pandas Dataframes, which are distributed across your cluster. The API to interact with these objects is very much like the pandas API.

This example assumes you have a basic understanding of Dask concepts. If you need more information about that, visit our Dask Concepts documentation first.

For more reference about Dask Dataframes, see the official Dask documentation.

From CSV on Disk

dask.dataframe.read_csv('filepath.csv')

For more details, see our page about loading data from flat files.

From pandas Dataframe

dask.dataframe.from_pandas(pandas_df)

For more details about adapting from pandas to Dask, see our tutorial.

From parquet

dask.dataframe.read_parquet('s3://bucket/my-parquet-data')

From JSON

This adopts the behavior of pandas.read_json().

dask.dataframe.read_json()

There are many other ways to read in data as Dask Dataframes as well. Dask official documentation gives thorough details of the Dask Dataframes API, if you have further questions!

Need help, or have more questions? Contact us at:

- support@saturncloud.io

- On Intercom, using the icon at the bottom right corner of the screen

We'll be happy to help you and answer your questions!